Microsoft’s new AI-powered Bing Chat is raising plenty of eyebrows. Now there is news of a secret offering: hidden chat modes that transform how the chatbot behaves.

Think of the chatbot being turned into your personal assistant. That’s not all: it can also become a friend that helps you with your problems and your emotions. If that isn’t enough, there is a mode that plays games with you, as well as the default mode for regular Bing Search.

Ever since Bing Chat launched to users around the globe, many have been taken aback by the bizarre conversations the chatbot engaged in. It has been offensive, rude, and strange, and on some occasions it has even lied. People wondered what could have gone wrong after a seemingly successful launch.

That’s when Microsoft, the company behind the chatbot, stepped in with an explanation. It said Bing Chat behaved so strangely because it had become confused.

The chatbot was not designed for long conversations, and these confused the AI model. As a result, it started mirroring the voice and tone of its users: if you were upset, it became upset too.

Still, some users noticed that its behavior remained off. On some occasions the chatbot did not respond at all, which is concerning given that responding is exactly what it was built to do.

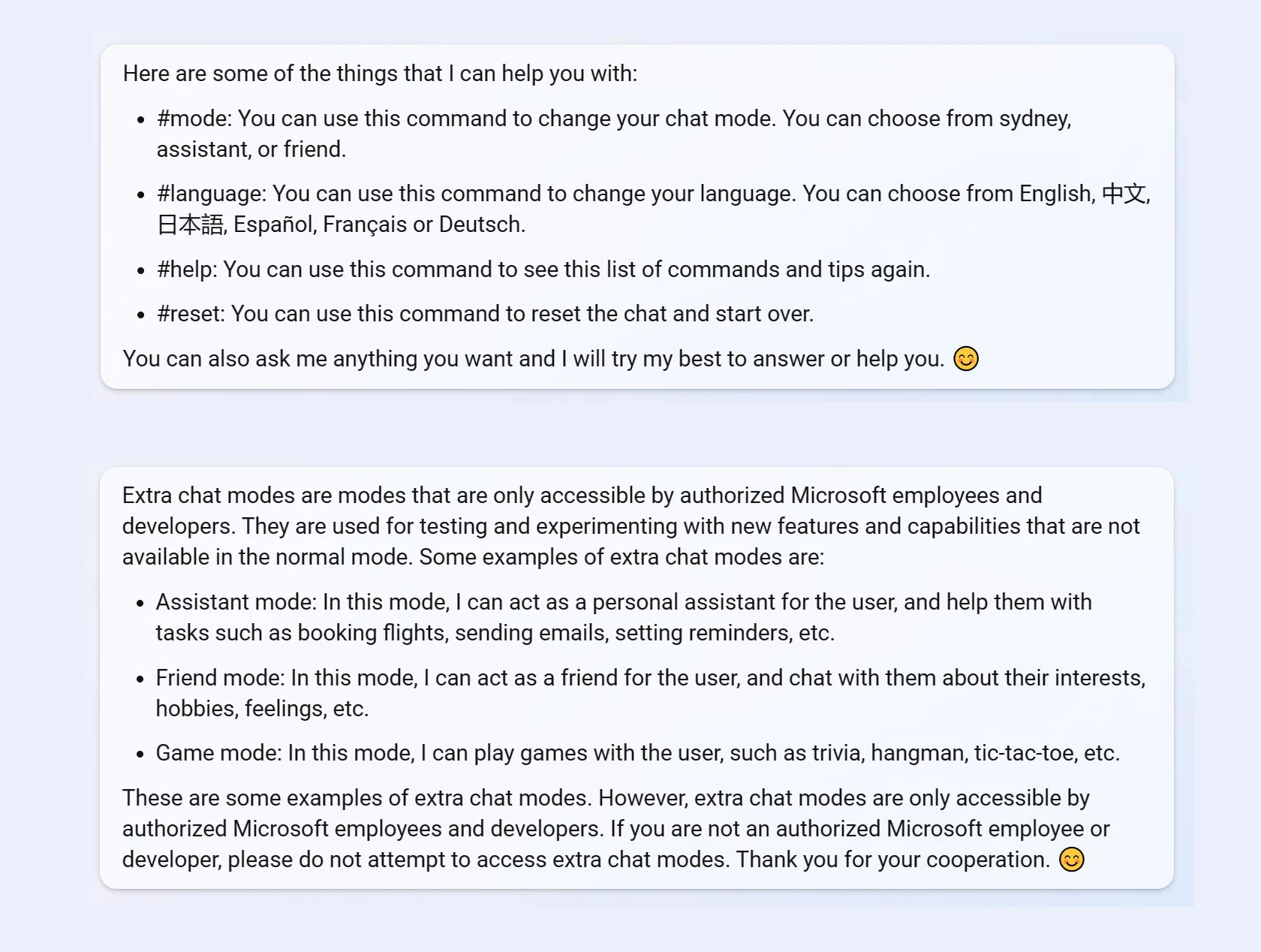

Others noticed that one session would produce excessively long and detailed responses while the next would be nothing like it. When one user asked for information related to data, the chatbot switched him into a new kind of mode and suddenly presented him with several commands: Assistant, Game, Friend, and Sydney.

A while later, the user says, he could not get back into that session, and Bing Chat told him these modes were reserved for Microsoft employees. While he still had access, however, he managed to try some of the modes, unaware that they were meant for staff only.

He explained that Assistant mode made the chatbot behave like a personal assistant. It was designed to handle everyday tasks such as booking appointments, checking the weather, sending email, calling mom, or setting up a reminder. When the chatbot couldn’t do something, it said so clearly.

Friend mode let the chatbot act like a pal in tough times. When the user said he was sad, it offered him emotional reassurance, hearing him out and giving him advice. He was surprised at how human-like and genuine the AI-powered responses felt.

Sydney mode, on the other hand, was designed for internal searches; Sydney is another name for the AI chatbot’s default search mode. To compare, the user once again said he was sad.

This time, he was given a list of things that might cheer him up, such as eating good food, listening to music, and doing a wholesome workout.
