A new study from Mozilla sheds light on YouTube’s ‘Dislike’ and ‘Not interested’ buttons and finds that they largely fail to stop, or even curb, unwanted recommendations on the app.

The nonprofit published its findings this week, and they suggest the platform is struggling to handle unwanted content. In the end, it is the users who are left frustrated and confused.

Mozilla launched its browser extension for crowdsourced research around two years ago, and that is when the problems with the app’s recommendations were first put on display.

Around 20,000 participants and more than 500 million videos later, the new report shows that YouTube’s own controls are falling short: its ‘Dislike’ and ‘Not interested’ buttons do little to change what the recommendation algorithm serves up.

People who disliked videos about guns were still shown more violent content, while those who disliked crypto videos kept being recommended even more crypto videos. This suggests the controls are not working the way users expect them to.

The study even suggests that some user controls have little to no effect on stopping unwanted recommendations. So why are people still being shown videos they have no interest in seeing?

Of the four buttons studied, ‘Don’t recommend channel’ was the most effective, preventing 43% of unwanted recommendations. ‘Remove from watch history’ was a decent filter, preventing around 29%. The ‘Dislike’ and ‘Not interested’ buttons performed worst, at just 12% and 11% respectively.

When people felt they had no control over what they were shown, they logged out, turned to other privacy tools, or simply created new accounts to manage the videos recommended to them.

A senior researcher at Mozilla says the team learned a great deal about how dissatisfied people are with the way YouTube handles the content they want to see. Users feel the app falls short in this regard and needs to up its game, and the study’s findings back them up.

Studies like these show that the app gives its users little to no say in how its algorithm functions. Mozilla has been researching YouTube and the way its algorithm works since 2019.

According to the report’s recommendations, YouTube needs to do a lot more if it wants to put users back in the driver’s seat. These include taking proactive measures and giving researchers more access to the app’s API and other tools.

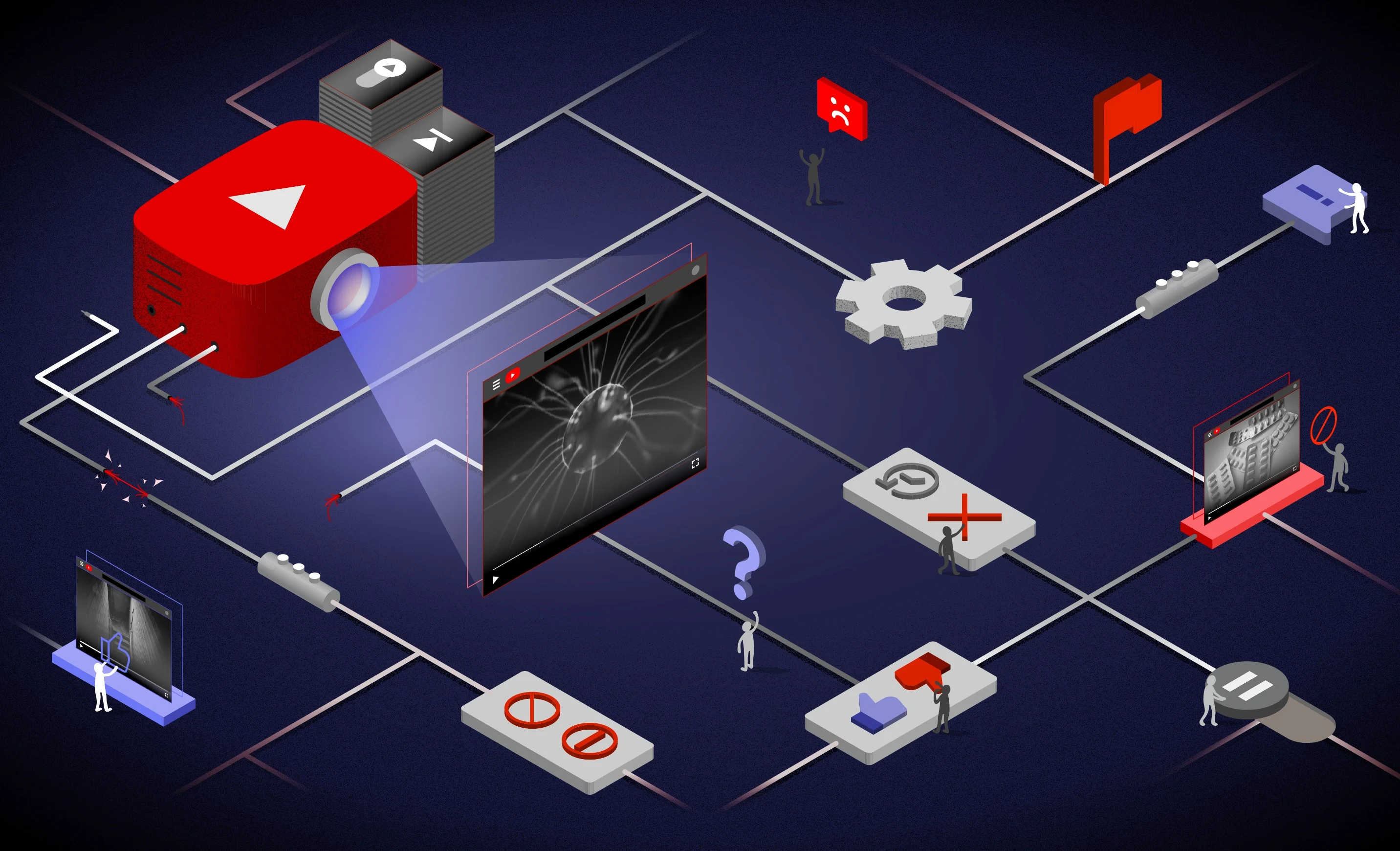

Image: Mozilla
