As the various advanced machine-learning programs we’ve collectively taken to calling “AI” become more and more popular, questions surrounding data privacy come to the fore. From large language models (LLMs) like ChatGPT to multimodal LLMs like Gemini, there’s no doubt that these programs have the potential to make life easier for people across countless industries.

Programs like these can generate large amounts of content, whether text (including code), images, audio, video, or a combination of these, with minimal time investment from the user. Not only are these programs incredibly useful in certain professional contexts, but they can also be a lot of fun to experiment with, which means many users are simply “trying out” the technology to see what it can do.

Data privacy, whether it concerns corporate or personal data, is an important consideration with systems like these. Users often feed large amounts of confidential data to them to give their prompts context. They also hand over personal information when registering accounts with the companies behind these programs. Finally, users share and create data through their interactions with the programs, sometimes including extremely private and sensitive information.

All of this happens after the underlying models have been trained, and training data can itself include personal information. The risk is that personal data, whether incorporated into a model during training, shared with a model through user interaction, or handed to a platform during account creation, could leak out to other users. There is also the danger of the platforms themselves hoarding and monetizing personal data, or sharing it with others.

Incogni’s researchers put some of the most popular “AI” platforms to the test, analyzing their privacy policies and other documentation. They then assessed each platform against 11 criteria that they had developed to gauge the data-privacy risks of using it. The researchers grouped these criteria under three major categories: data collection and sharing, transparency, and training or “AI”-specific considerations.

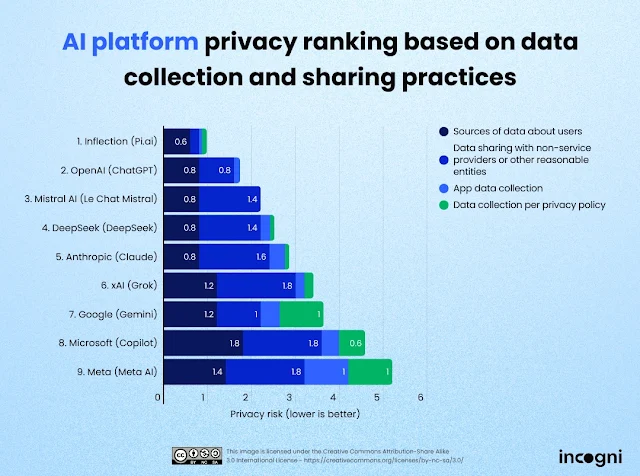

These assessments resulted in a series of privacy rankings, culminating in the following overall ranking.

In the overall ranking, Le Chat comes out on top: its combined score across the three categories edges ahead of ChatGPT’s and holds a comfortable lead over that of Grok, which comes in third. Notably, the three platforms from established players in the data-collection market (Microsoft’s Copilot, Google’s Gemini, and Meta.ai, which is built on Meta’s Llama models) take third-last, second-last, and last place, respectively.

Drilling down into the first assessment category, data collection and sharing practices, we see the same three tech giants rounding out the bottom of the ranking, this time in a slightly different order, with Google’s Gemini, Microsoft’s Copilot, and Meta.ai taking the bottom three places, in that order.

Two of the top three platforms are familiar from the overall ranking, with ChatGPT and Le Chat taking second and third place, respectively. Grok falls out of the top three, though, with Inflection’s Pi AI taking first place.

The criteria in this category were aimed at answering the following questions:

- What personal data can be shared with unessential entities?

- Where do these platforms source user data?

- What personal data do related apps collect and share?

The second category had to do with transparency—how easy (or difficult) each platform makes it for users to understand how their data is handled. The criteria in this category addressed the following questions:

- How clear is it whether prompts are used for training?

- How easy is it to find information about how models were trained?

- How readable are the relevant policy documents?

Once again, two of the three best performers overall make it into the top three in this category, with ChatGPT and Le Chat taking first and second place, respectively. Grok, the remaining member of the overall top three, ties with Claude for third, so it effectively makes the top three here as well.

Looking at the bottom three, repeat offender Gemini takes second-to-last place, but both Copilot and Meta.ai have been displaced; instead, DeepSeek takes third-last place and Pi AI comes in last.

The third and final category covered “AI”-specific privacy criteria, looking for answers to the following questions:

- Is user data used to train models?

- Are user prompts shared with other entities?

- What data is used to train the models?

- Can user data be removed from training datasets?

This category shows the greatest deviation from the overall ranking, with Gemini and DeepSeek tying for first place and Claude taking second. Meta.ai stays in its familiar spot in the bottom three, taking second-last place. Interestingly, ChatGPT came in third-last in this category, while Pi AI took last place.

One important takeaway from all this is that none of the platforms studied is completely private or safe. There’s also no clear, across-the-board winner, so users have to decide which aspects of their data privacy matter most to them and focus on those. A user who values transparency above all else, for example, will likely have to make compromises in other areas, such as data collection and sharing practices or training considerations.

Head of Incogni, Darius Belejevas, had this to add:

"These AI platforms aren’t going anywhere anytime soon. If anything, more are joining the fray each year. With all the benefits, excitement, and possibilities they bring, interacting with them isn’t without risk. In fact, there’s risk involved even if you don’t interact with them, given that they may be trained on datasets that include personal data that’s been collected, aggregated, and made available for model training without the data subject’s consent or even knowledge."

"Once a user starts interacting with these models, they run the risk of having information they input being captured by the company behind the model, used for training or other purposes internally, and shared with other entities (each of which may, in turn, share it with others). These platforms and programs are exciting, we just need to stay on top of data-privacy concerns to keep them safe."

Incogni’s full analysis (including public dataset) can be found here.
