Search experts have long wondered how artificial intelligence would change the way people use Google. A new study now offers answers grounded in actual user behavior. Seventy people of varied ages and backgrounds were each given eight tasks to complete using Google Search. Six of those tasks led to Google’s AI-generated answers, known as AI Overviews (AIOs), while two did not. Every tap, scroll, click, and spoken comment was recorded and analyzed to reveal how people respond to this new search experience.

The researchers from GrowthMemo compiled over 525 recordings, of which about 400 involved interactions with AI-generated content. They didn’t just look at which links were clicked. They measured how far people scrolled, how long they lingered on different parts of the screen, when they chose to click, and how much trust they expressed in what they saw. Of the participants, 42 were on mobile devices and 27 used desktops, giving the study a good view of how device type affects behavior.

One of the most significant takeaways is how sharply AI Overviews cut website traffic. When an AIO appeared in search results, clicks to outside websites dropped: by nearly two-thirds on desktop and by almost half on mobile. People didn’t need to leave the page to get answers. Nearly 88% of participants expanded the AIO by tapping the “show more” button, but their attention didn’t go much further. Although the average scroll depth was 75%, the median was only 30%, meaning that half of the time people read no further than roughly the first third of the AI summary. If a source didn’t appear early in the AIO, it was effectively invisible.
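Mean and median scroll depth can diverge sharply when a few sessions read the whole summary while most stop early. The sketch below uses invented scroll depths (not the study’s data) to show that effect, plus the relative-drop arithmetic behind a phrase like “fell by nearly two-thirds.” The helper names `summarize` and `relative_drop` are hypothetical, chosen for illustration.

```python
from statistics import mean, median

# Hypothetical per-session scroll depths (% of the AIO scrolled).
# These numbers are invented for illustration; they are NOT the study's data.
scroll_depths = [10, 15, 20, 25, 30, 30, 35, 90, 100, 100]


def summarize(depths):
    """Return (mean, median) scroll depth for a list of sessions."""
    return mean(depths), median(depths)


def relative_drop(clicks_without_aio, clicks_with_aio):
    """Fractional decrease in outbound clicks when an AIO is shown.

    Hypothetical helper: 0.5 means clicks halved, 2/3 means they fell by two-thirds.
    """
    return (clicks_without_aio - clicks_with_aio) / clicks_without_aio


avg, mid = summarize(scroll_depths)
print(f"mean = {avg:.1f}%, median = {mid:.1f}%")

# Hypothetical click counts for the same queries with and without an AIO.
print(f"outbound clicks fell by {relative_drop(30, 10):.0%}")
```

With these made-up values the mean (45.5%) sits well above the median (30%) because three near-complete reads pull it up, which is why the median is the better guide to what a typical reader actually sees.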

Rather than reading the summaries word for word, most participants skimmed. About 86% quickly scanned the content for helpful points or recognizable sources. On average, people spent 30 to 45 seconds engaging with the AIO: enough time to take in information, but not enough for detailed reading. Clicks within the AIO were rare. Just 19% of mobile users clicked on any citation-related link, and on desktop the figure was only 7.4%. On computers, AIOs often lacked clickable elements altogether.

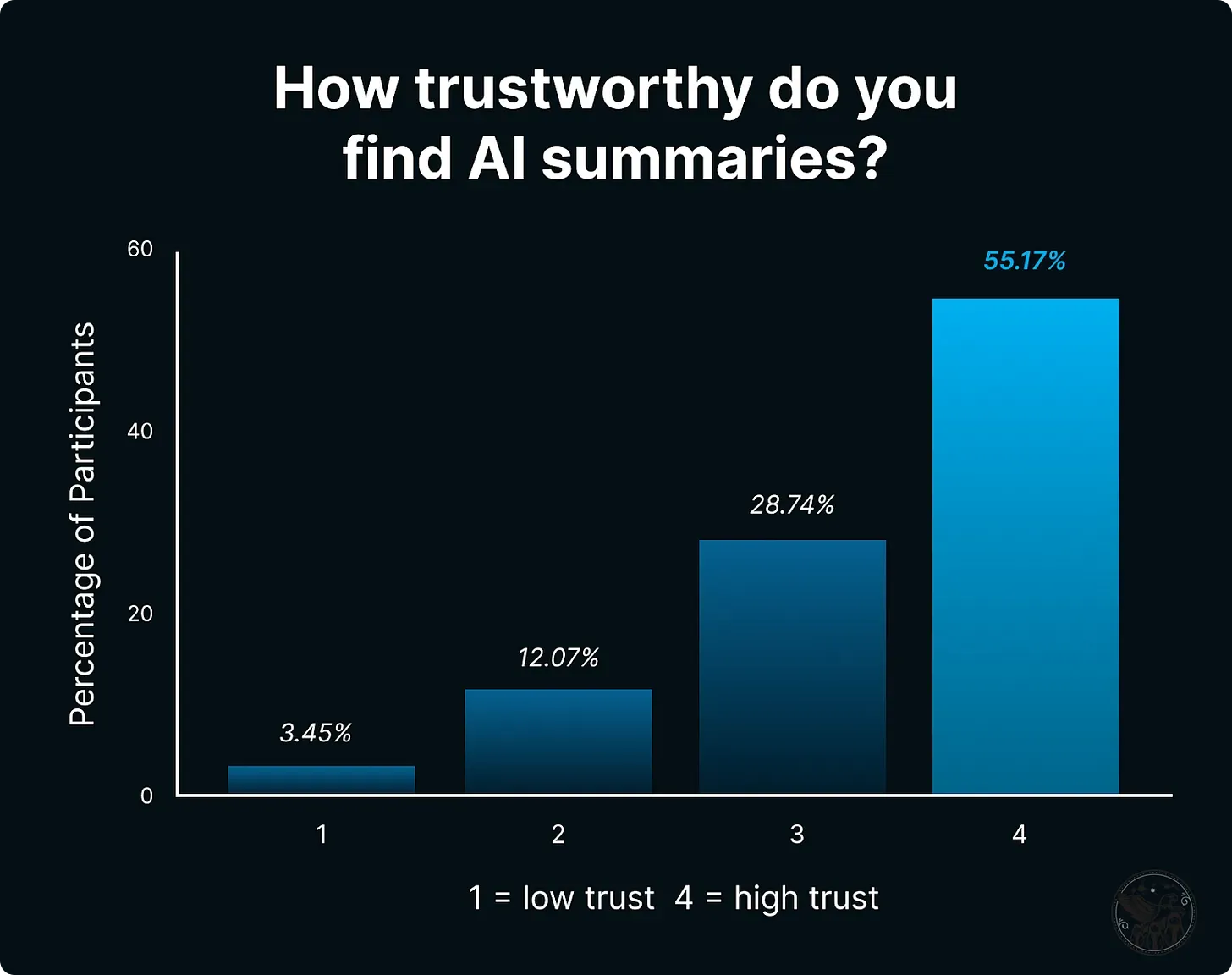

Trust was a central theme in the study. When a known and credible source appeared near the top of an AIO, people were more likely to believe the information and keep reading. There was a measurable link between how far someone scrolled and how much they trusted the result: a Spearman correlation of 0.38, a moderate positive relationship. On average, users rated their trust in AIOs at 3.4 out of 5, but that trust varied widely with the type of query. For simple questions like finding a discount code, people trusted the AI easily. For more serious topics, like money or health advice, they were much more skeptical.
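Spearman’s correlation is Pearson’s correlation computed on ranks rather than raw values, which makes it a natural fit for an ordinal measure like a 1-to-5 trust rating. Here is a minimal sketch, assuming made-up scroll depths and trust scores (not the study’s data) and hypothetical helper names; tied values share the average of their ranks, the usual convention.

```python
def average_ranks(values):
    """Assign 1-based ranks; tied values share the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j to cover every value tied with values[order[i]].
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        shared_rank = (i + j) / 2 + 1  # average of 1-based positions i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = shared_rank
        i = j + 1
    return ranks


def pearson(x, y):
    """Pearson correlation of two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    spread_x = sum((a - mean_x) ** 2 for a in x) ** 0.5
    spread_y = sum((b - mean_y) ** 2 for b in y) ** 0.5
    if spread_x == 0 or spread_y == 0:
        raise ValueError("correlation is undefined when a variable is constant")
    return cov / (spread_x * spread_y)


def spearman(x, y):
    """Spearman's rho: Pearson correlation applied to the rank vectors."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length sequences with at least 2 items")
    return pearson(average_ranks(x), average_ranks(y))


# Invented sample: % of the AIO scrolled and a 1-5 trust rating per session.
scroll_depth = [20, 35, 50, 10, 80, 60]
trust_rating = [3, 3, 4, 2, 5, 3]

rho = spearman(scroll_depth, trust_rating)
print(f"Spearman rho = {rho:.2f}")
```

If SciPy is available, `scipy.stats.spearmanr` computes the same coefficient and also averages tied ranks; the hand-rolled version above just makes the rank-then-correlate idea explicit.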

The kind of task affected how deeply people engaged. Health-related searches averaged a 52% scroll depth and DIY tasks 54%. Financial questions came in at 46%, timing-related queries (like figuring out the best month to buy a car) averaged 41%, and coupon-related searches only 34%. People often said things like, “I like the AIO, but I still check Reddit,” showing they wanted to cross-check before fully trusting what the AI presented. Videos and trusted community forums played a big role in filling those trust gaps.

When people left the AIO, they didn’t usually go to traditional blogs or news sites. Instead, they headed to places like Reddit or YouTube. About a third of the outbound traffic went to these community-based platforms. Younger users showed a clear preference for content from their peers. Even if the AIO had a good answer, these users wanted to hear what other real people had to say first.

Age and device type influenced behavior in noticeable ways. People between 25 and 34 years old were the most comfortable with AIOs. Many of them took the AI summary as the final answer. Older participants tended to dig deeper. They clicked more often on traditional links and voiced more doubt when reviewing AI content. Mobile users scrolled more frequently and clicked more often, possibly because mobile screens make it easier to keep moving vertically. In contrast, desktop users tended to stay put.

Even though many feared AIOs would completely stop traffic to websites, 80% of users still explored other parts of the search results. But most didn’t interact much within the AIO. They moved on to organic links, discussion threads, or interactive features like “People Also Ask.” This shows that while being included in an AIO might boost credibility, it doesn’t automatically drive traffic.

People now decide where to click using a new mental process. First, they ask themselves if they trust the source. Only then do they decide if the answer is useful. Recognition plays a big role—well-known websites and trusted brands were clicked far more often than lesser-known ones, even if the unknown sources had better summaries. Over 70% of the time, users chose a result that was already visible on the screen without scrolling.

The emotional side of search also came through in the data. In 38% of cases, users opened a second result just to feel more confident about the AIO answer. Health and financial searches made people pause and think more. When the AI cited unfamiliar brands, some users hesitated or said they didn’t trust it. For simpler topics like local shopping or basic facts, people browsed quietly and without much reaction.

Charts: Kevin Indig

For online shopping, AIOs didn’t carry much weight. People focused more on product carousels, paid ads, and shopping modules. Many even said they’d rather just go straight to Amazon. In how-to searches, videos caught the most attention. If users saw a useful thumbnail or a quick preview in motion, they clicked through to YouTube. These video results made up less than 2% of all elements, yet they had longer dwell times—around 37 seconds, even more than the AIOs.

All of this points to a big shift in how success should be measured online. Click-through rate no longer tells the full story. Marketers now need to ask different questions: How often does our content appear in an AIO? How high up does it show? Do people recognize our brand when they see it there? These visibility metrics are becoming more valuable than raw traffic numbers.
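The visibility questions above can be turned into simple tracking metrics. This sketch assumes you already collect, for each tracked query, the list of domains an AI Overview cited in display order; the `AIOSnapshot` record and `visibility_report` function are hypothetical, not a Google API. It reports how often a domain is cited and its average position, where position 1 is the first citation shown.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class AIOSnapshot:
    """One observed AI Overview: the query and its cited domains in display order.

    Hypothetical tracking record; Google does not provide this structure.
    """
    query: str
    cited_domains: list[str]


def visibility_report(snapshots, domain):
    """Return (inclusion_rate, average_position) for one domain.

    inclusion_rate: share of snapshots whose AIO cites the domain.
    average_position: mean 1-based citation position when cited, else None.
    """
    positions = [
        snap.cited_domains.index(domain) + 1
        for snap in snapshots
        if domain in snap.cited_domains
    ]
    inclusion_rate = len(positions) / len(snapshots) if snapshots else 0.0
    average_position = mean(positions) if positions else None
    return inclusion_rate, average_position


# Invented example data using reserved example domains.
snapshots = [
    AIOSnapshot("best month to buy a car", ["example.com", "example.org"]),
    AIOSnapshot("how to fix a leaky faucet", ["example.net", "example.org", "example.com"]),
    AIOSnapshot("shoe store coupon code", ["example.org"]),
]

rate, position = visibility_report(snapshots, "example.com")
print(f"cited in {rate:.0%} of tracked AIOs, average position {position}")
```

Average position is worth tracking alongside the inclusion rate, since a citation that appears late in an AIO is rarely seen even when it technically counts as "included."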

The ripple effects for brands and publishers are serious. Sites that make money through clicks—like those running ads or affiliate programs—are likely to feel the impact. With fewer users clicking away from the search results, old revenue models will need an update. Going forward, the focus has to be on building credibility. That means earning mentions from trusted sources like .gov and .edu sites, having expert authors, and creating content that users recognize and trust right away.

In short, the rules of search have changed. We’ve moved from a world where ranking high meant getting clicks to a world where visibility and trust matter more than anything. People scan quickly, make fast judgments, and only click when they’re confident. If your brand isn’t already familiar, or doesn’t show up early in the results, you may never be seen.

This new reality requires a fresh approach to SEO. Metrics and goals need to evolve. Google’s AI Overviews aren’t just an add-on feature; they’re reshaping how users find and evaluate information. And now, thanks to this research, we have data to back that up.

This study used UXtweak to capture all user interactions. The final dataset includes 408 fully analyzed AIO interactions, gathered from U.S. participants during March and April of 2025.

